5. Differentiation and Searching#

Numerical differentiation in really straightforward, where we will evaluate the forward, central, and extrapolated-difference methods. Another tool is trial-and-error searching which can be used in root-finding, minimization, and is widely used to solve problems where analytic solutions do not exist or are impractical.

5.1. Numerical differentiation#

Before implementing a numerical method for differentiation, let’s review some basics from Introductory Calculus. The elementary definition was

but this is impractical to implement in a computer. The denominator is approaching zero and the finite machine precision causes oscillations in the solution. Therefore we implement a series of approximations to obtain a practical solution.

5.1.1. Forward difference#

The most direct method for numerical differentiation starts by expanding a function in a Taylor series to obtain its value a small step h away:

We obtain a forward-difference derivative by using the approximation in the elementary definition for \(y^\prime(t)\):

An approximation for the error follows from substituting the Taylor series:

You can think of this approximation as using two points to represent the function by a straight line between \(x\) and \(x+h\). The approximation has an error proportional to \(h\) (the slope of the line). Therefore, we can make the approximation error smaller by taking smaller steps (decreasing \(h\)), yet there will be some round-off error that is present from the subtraction in the forward-difference derivative. To see how this algorithm works, let \(y(t) = a + bt^2\), where the exact derivative is \(y^\prime = 2bt\) and the computed derivative is:

This approximation is only good for small h, where the \(bh \ll 1\). Implementing the forward-difference algorithm in python is super easy. Simply use the diff function from numpy. Suppose you have an array of N values in y, then np.diff(y) returns an array with length N-1 containing the consecutive differences (i.e., y[1]-y[0], y[2]-y[1], …). If the values are sampled at regular intervals in the t array, then \(y^\prime(t)\) = np.diff(y)/(t[1]-t[0]).

5.1.2. Central difference#

An improved approximation uses the principles from the Taylor series (i.e., expand relative to a central point). Now, rather than making a single step of h forward, we form a central difference by stepping forward a half-step and backward a half-step:

We estimate the error by substituting the Taylor series for \(y(t\pm h/2)\). Do you think this will result in a better approximation? Why?

By substitution, we find:

and through many cancellations, we find:

and the central-difference derivative is:

The important difference between the forward-difference and central-difference algorithms is that the central-difference algorithm cancels the terms with an even power of h from each of terms in the Taylor series. This makes the leading-order error of central-difference accurate to \(h^2\), while the forward-difference is only accurate to order h. Returning to our parabola example (\(y(t) = a + bt^2\)), we see that the central-difference gives the exact derivative independent of \(h\):

The numpy module has a special function called gradient that performs a second order central differences method on the input array and requires the stepsize h as an argument. From an array with N elements \(y(t)\), it returns an array of N elements for \(y^\prime(t)\).

5.1.3. Extrapolated difference#

You may have noticed that different differentiation rules will keep a different number of terms in a Taylor series expansion, which also provides an expression for the error. We can reduce the error further by combining several algorithms together so that the summed errors extrapolate to zero. For example, we could employ two central-difference algorithms as a linear combination, where the first algorithm takes half-steps and the second takes quarter-steps. Let’s look at the quarter-step approximation (\(h\rightarrow h/2\)):

The quarter-step derivative produces a factor of 1/96 instead of the 1/24 on the second order term. If we want to cancel this term, we realize that \(96 = 4*24\) and so we perform the following subtraction to get:

Thus, we find for the extrapolated-difference the following:

5.2. Second Derivatives#

Many problems in physics involve a second derivative, or acceleration. One of the most important equations is Newton’s second law that tells us that a force \(F\) and acceleration \(a\) are linearly related:

where \(m\) is a particle’s mass and the acceleration can be expressed as the second derivative of a function \(y(t)\). If we can determine the acceleration, then we can determine the force. Using the central-difference method for the first derivative, we have:

which gives the derivative at t by moving forward and backward from t by a half-step \(h/2\). We take the second derivative by applying the same operation again as follows:

5.3. Root Via Bisection#

Trial-and-error root finding looks for a value of \(x\) at which \(f(x)=0\), where the \(f(x)\) can be polynomials or a transcendental function (e.g., \(10\sin x = 3x^3 \rightarrow 10\sin x - 3x^3 = 0\)). The search procedure starts with a guess for \(x\), substitutes that guess into \(f(x)\) (i.e., the trial), and sees how far from zero the evaluated function is (i.e., the error). the program then revises the guess \(x\) based on the error and then substitutes the revised guess into \(f(x)\) again. The procedure continues until \(f(x)\simeq 0\) or to some desired level of precision. Sometimes the procedure is stopped once it appears progress is not being made.

The most elementary trial-and-error technique is the bisection algorithm. It is reliable, but slow. If you know some interval in which \(f(x)\) changes sign, then the bisection algorithm is the way to go. The basis of the bisection algorithm is that we start with two values of \(x\) between which a zero occurs (i.e., the bounds). Let us say that \(f(x)\) is negative at \(a\) and positive at \(b\). Thus we start with the interval \(a\geq x \geq b\) within which we know a zero occurs. Then we evaluate each of these tasks for a given iteration:

Calculate \(c\), the midpoint of the interval, \(c = \frac{a+b}{2}\).

Evaluate the function at the midpoint, \(f(c)\).

If convergence is satisfactory (i.e., \(|f(c)|\simeq 0\)), return c and stop iterating.

Examine the sign of \(f(c)f(a)\).

If negative then set \(b = c\) and return to step 1.

Else set \(a = c\) and return to step 1.

Let’s turn these steps into code:

def bisection(f,a,b,N):

'''Approximate solution of f(x)=0 on interval [a,b] by bisection method.

f : function

a,b : The interval in which to search for a solution.

N : The number of iterations to implement.'''

if f(a)*f(b) >= 0: #checking that a zero exist in the interval [a,b]

print("Bisection method fails.")

return None

#initial interval

a_n = a

b_n = b

for n in range(1,N+1):

c = (a_n + b_n)/2 #calculate midpoint

f_c = f(c) #evaluate function at midpoint

if f(a_n)*f_c < 0: #evaluate sign

b_n = c

elif f(b_n)*f_c < 0: #evaluate sign

a_n = c

elif f_c == 0:

print("Found exact solution.")

return m_n

else:

print("Bisection method fails.")

return None

return (a_n + b_n)/2

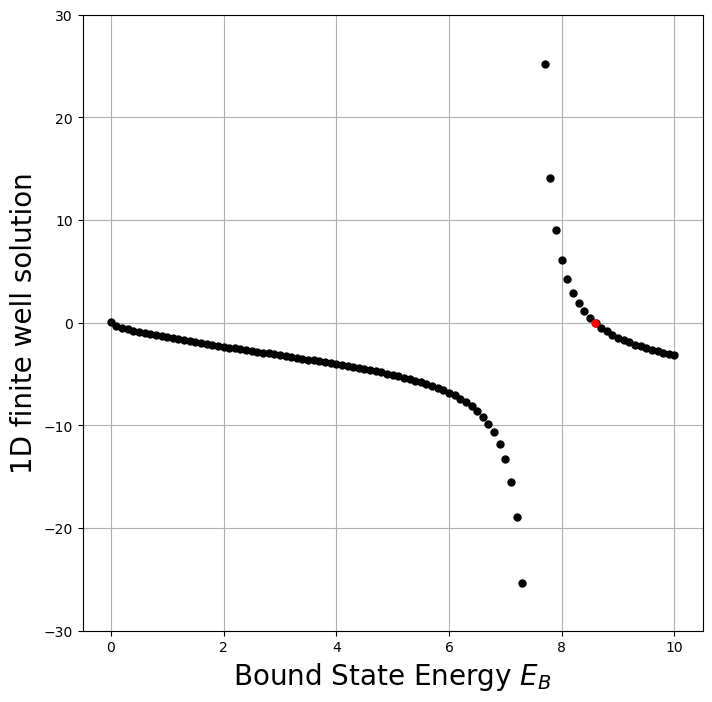

Now let’s try our function on a problem from quantum mechanics. The most standard problem is to solve for the energies of a particle of mass \(m\) bound within a 1-D square well of radius \(a\). The potential \(V(x) = -V_o\) if \(|x|\leq a\), otherwise it is zero. From quantum mechanics textbooks we know there is a solution for the energies of the bound states \(E_B\):

Here we have chosen units such that \(\hbar=1\), \(2m=1\), and \(a=1\). Now we want to find several of the bound state energies for the even wave functions. First we have to find a reasonable interval and then apply our algorithm.

import numpy as np

import matplotlib.pyplot as plt

from scipy.optimize import bisect

V_o = 10 #define the potential

def even_func(E_B):

#E_B is a guess at the ground state energy

if (V_o - E_B)>= 0:

temp = np.sqrt(V_o - E_B)

return temp*np.tan(temp) - np.sqrt(E_B)

else:

return np.nan

fig = plt.figure(1,figsize=(8,8))

ax = fig.add_subplot(111)

x_rng = np.arange(0,15,0.1)

for x in x_rng:

y = even_func(x)

ax.plot(x,y,'k.',ms=10)

root_EB = bisection(even_func,8,10,50)

scipy_root = bisect(even_func,8,10)

print("our method = ",root_EB)

print("scipy method = ",scipy_root)

ax.plot(root_EB,even_func(root_EB),'r.',ms=10)

ax.grid(True)

ax.set_ylim(-30,30)

ax.set_xlabel("Bound State Energy $E_B$",fontsize=20)

ax.set_ylabel("1D finite well solution",fontsize=20);

our method = 8.59278527522984

scipy method = 8.592785275230199

5.4. Newton-Raphson searching#

The Newton-Raphson (NR) algorithm is another way to find roots, but it is much quicker than the bisection method. The NR method is equivalent to drawing a straight line \(f(x)\simeq mx + b\) to a curve at an \(x\) value for which \(f(x) \simeq 0\). Then it uses the intercept of the line with the \(x\) axis at \(-b/m\) as an improved guess for the root. If the “curve” were a straight line, then the answer would be exact. Otherwise, it is a good approximation if the guess is close enough to the root for \(f(x)\) to be nearly linear.

As an iteration scheme, we need to start with a guess \(x_o\), then find a correction to the guess \(\Delta x\), and finally formulate a new guess \(x = x_o + \Delta x\). Recall that the equation of a line can be formulated in terms of a Taylor series, keeping on the first two terms:

We then determine the correction \(\Delta x\) by calculating the point at which this linear approximation to \(f(x)\) would cross the \(x\) axis:

Notice that the NR method requires the calculation of the first derivative \(df/dx\) at each guess. In many cases you may have an analytic expression for the derivative and can build it into the algorithm. However, it is simpler to use a numerical forward-difference approximation to the derivative. While a central-difference approximation would be more accurate, it would require addition function evaluations and the overall process would take longer to run.

5.5. Problems#

Complete the following problems in a Jupyter notebook, where you will save your results as an external file (*.png).

Create a \(\LaTeX\) document with:

an abstract summary

sections for each problem that state the problem, explain your approach to solving the problem, and display the results

include a reference for each solution (this can be textbooks)

Problem 1

Write a program to calculate sin(x) and cos(x). Calculate and plot the numerical derivatives using a) forward-difference, b) central-difference, and c) the analytical derivative.

Problem 2

Write a function to implement the Newton-Raphson method. Apply it to the 1D Quantum well example and compare it to the bisection method. Also compare your implementation to the function from scipy.optimize.